SOFTWARE ENGINEER

NEW MEDIA ARTIST

Electronic Production & Design, Berklee College of Music

Interactive Telecommunications, New York University

New York, NY

Contact - pls9788@nyu.edu - GitHub

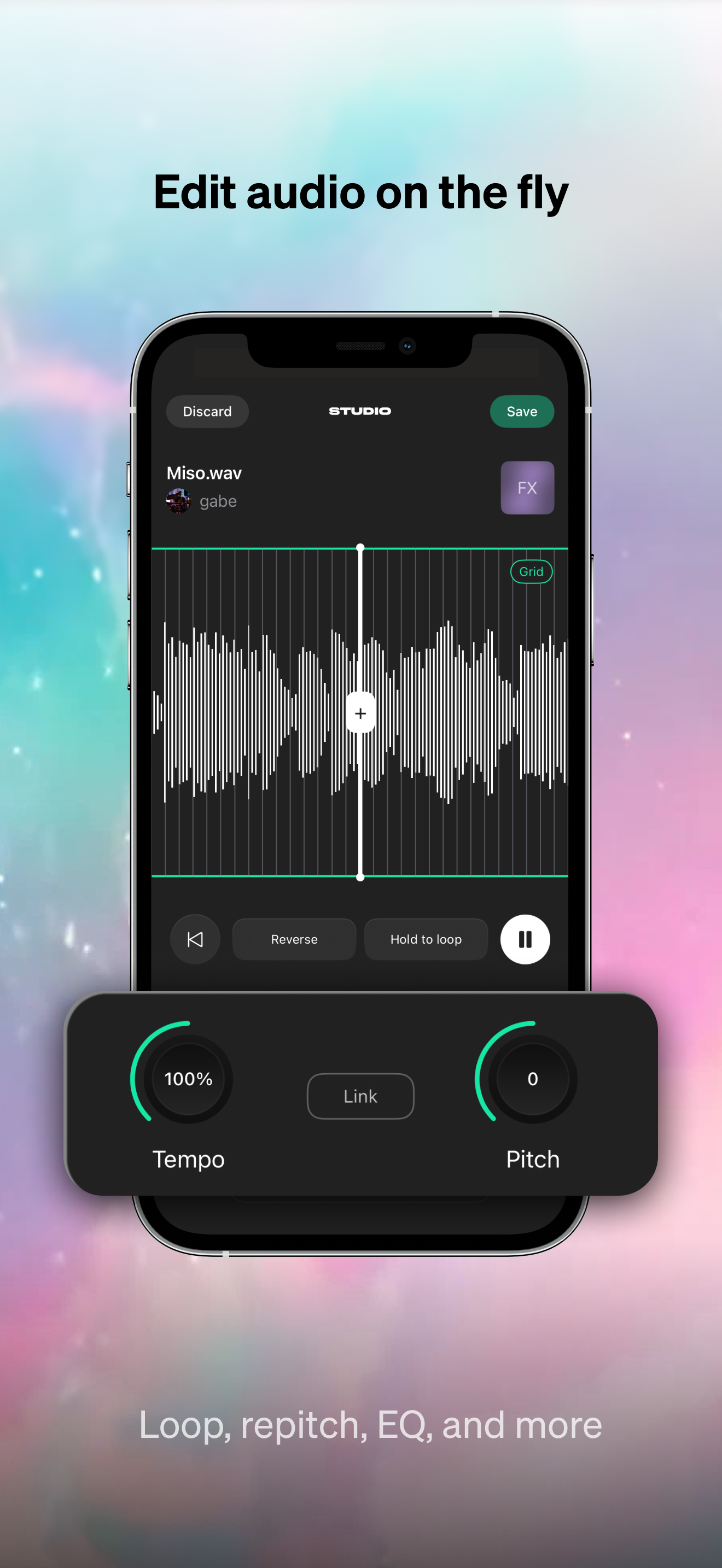

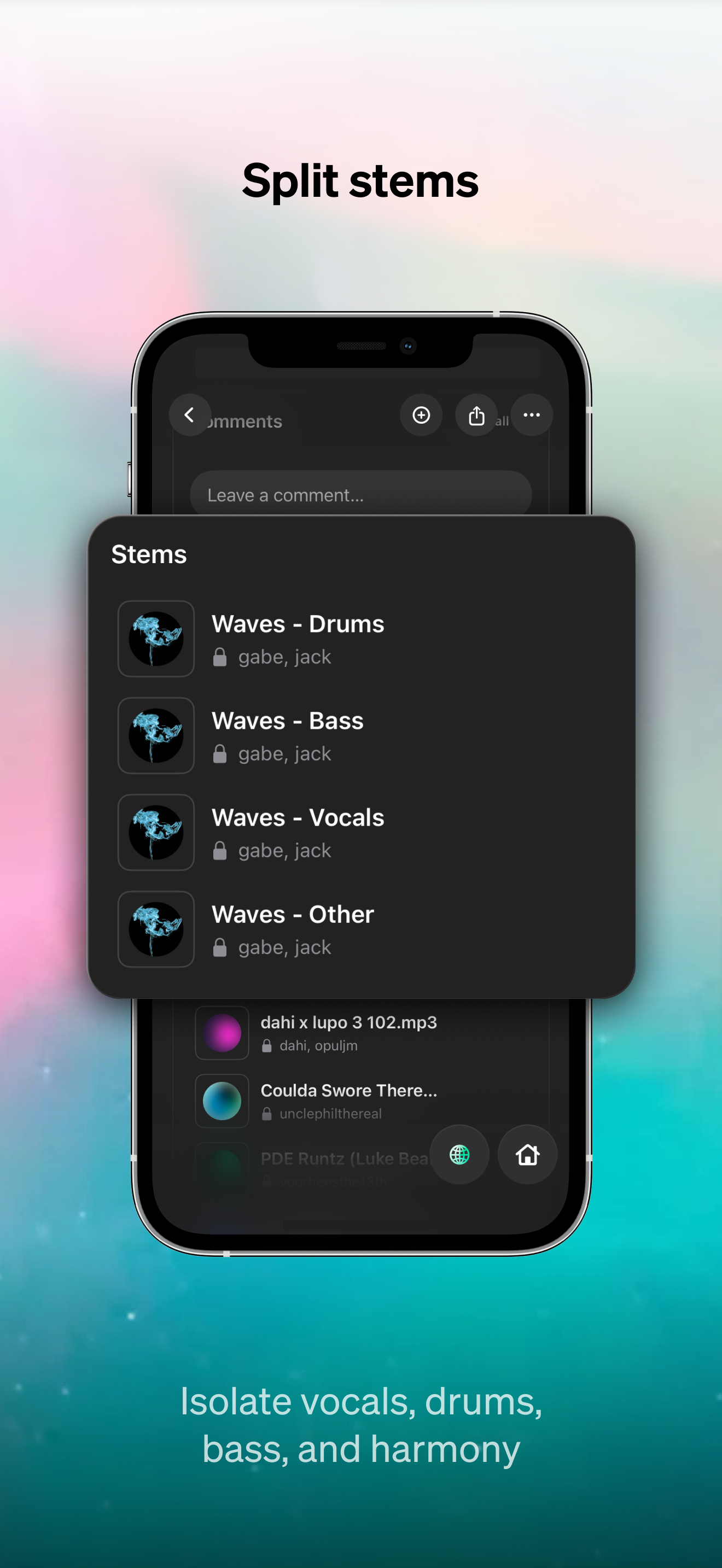

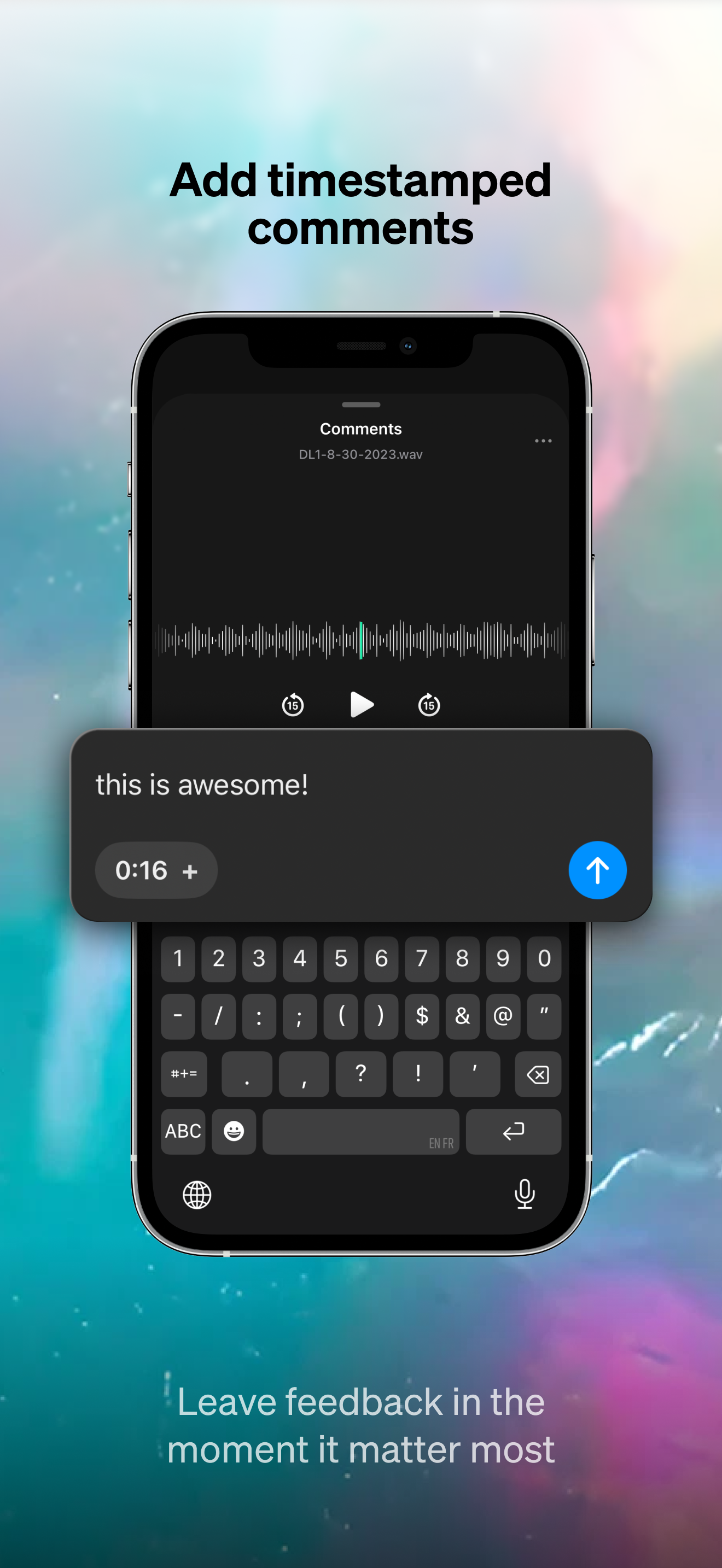

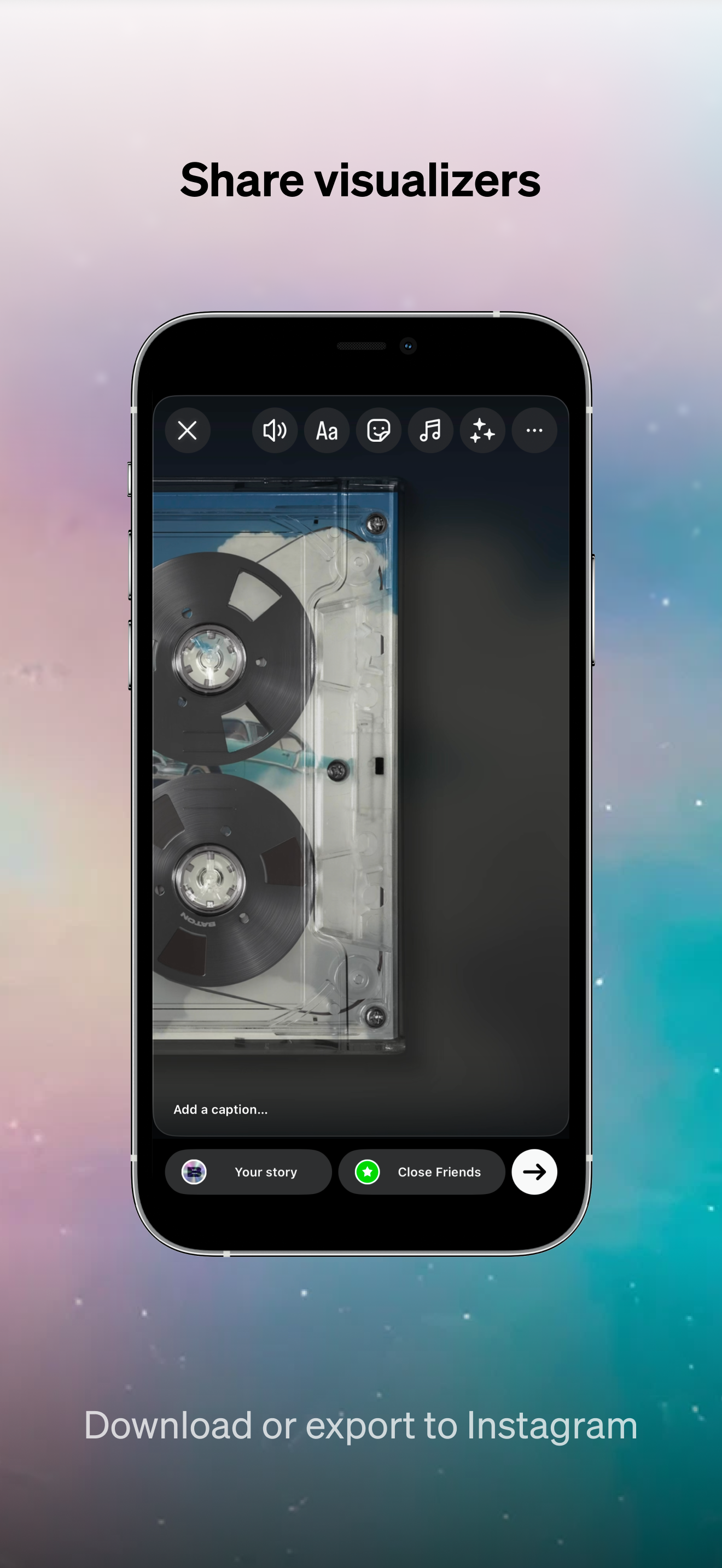

Baton - Music Collaboration App

JAN, 2024 - PRESENT

Baton is an iOS collaborative music platform that transforms stems, drafts, and works in progress into living creative spaces where artists and fans can co-create.

Using real-time audio processing, adaptive mixing tools, and AI-powered musical suggestions, Baton makes collaboration more accessible by reducing the friction between idea and iteration.

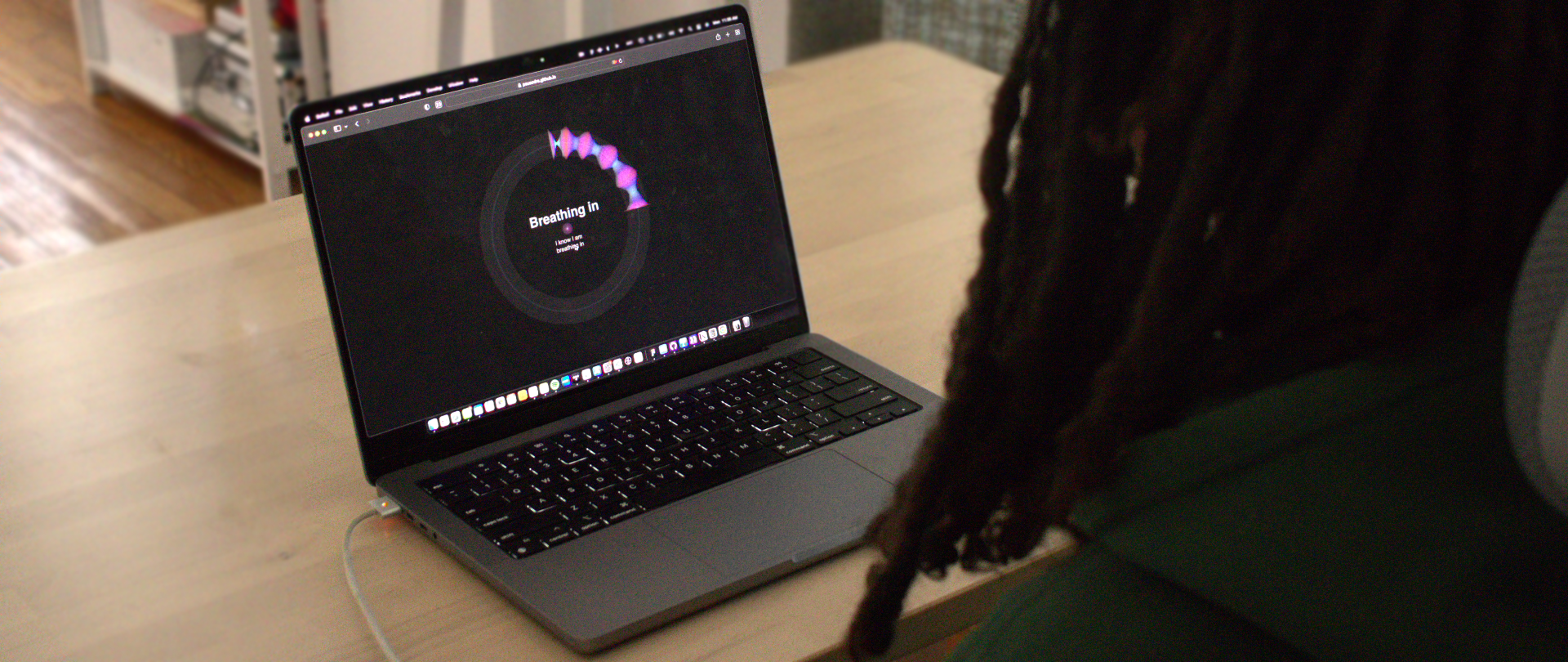

Breath Cycle - Webcam biofeedback + AI guided meditation

MAY, 2023

Breath Cycle is a web-based, contactless breath biofeedback system that uses computer vision to monitor breathing patterns and give feedback on them, making breath awareness more accessible. In this interactive breath awareness experience, users can visualize their breathing pattern over time, use GPT to generate personalized meditations, and practice physiological coherence by engaging with feedback from a Bluetooth Low Energy (BLE) HRV monitor.

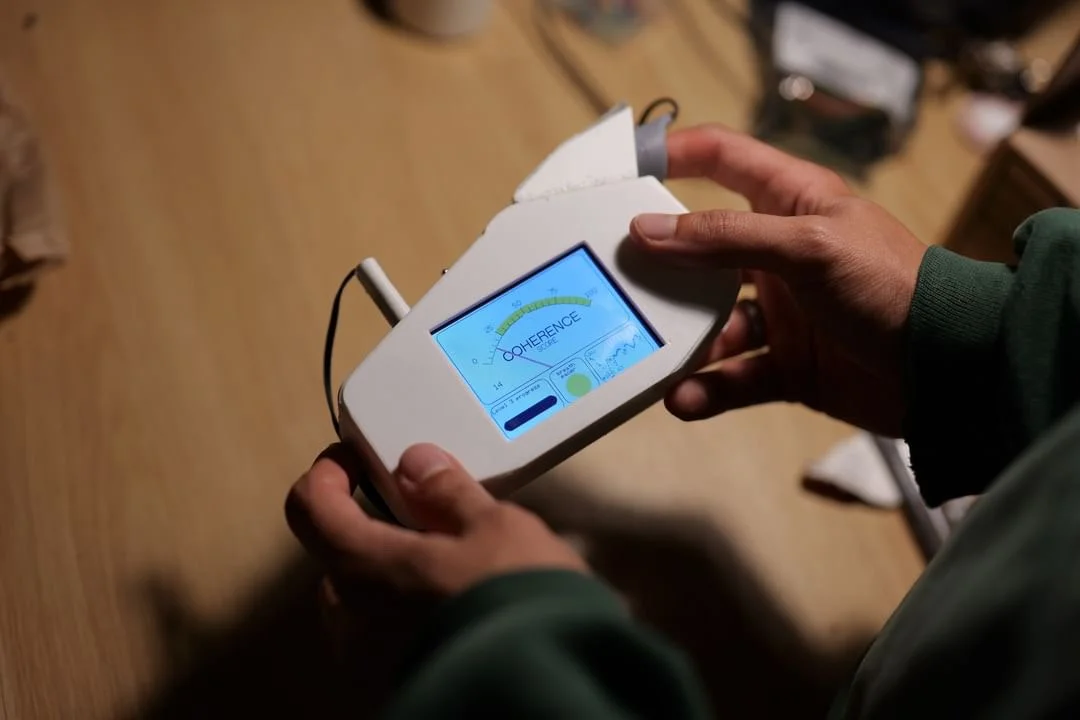

Breath Cycle is a webcam-based breath biofeedback system that can be accessed from any browser. To make breath-centered meditation more accessible to all, the system's default mode syncs dynamic text, shapes, and colors to the user's breathing. At the center of the screen, quotes from Thich Nhat Hanh's commentary on the Anapanasati Sutra invite the user to follow their inhalations and exhalations while procedurally generated graphics move in time with them. Along an outer circle, a radial graph gradually completes over the course of the session, displaying the user's breathing waveform over time. The contour and coloring of the graph are modulated by the breath signal, forming a unique visualization every time, and the resulting shape can be saved as a .jpeg at the end of the session. In addition to the default mode, the system includes two other modes. In the Breath Affirmation AI mode, users can type in any intention and have a GPT-generated meditation unfold as they breathe in and out. In the Coherence Trainer mode, users can connect any Bluetooth Low Energy-compliant HRV monitor and see a breath pacer and a percentage coherence score at the center of the screen.
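The radial graph can be pictured as a polar mapping in which elapsed session time sets the angle and the breath signal offsets the radius and color. The Python below is a minimal sketch under stated assumptions, not the project's rendering code: `radial_breath_points`, the [-1, 1] signal normalization, the radius and amplitude defaults, and the 0 to 240 hue range are all hypothetical.

```python
import math


def radial_breath_points(samples, total_samples, base_radius=200.0, amplitude=40.0):
    """Map breath samples onto a ring that fills in over a session (hypothetical helper).

    Sample i sits at an angle proportional to i / total_samples, so a partially
    finished session draws a partial arc. Samples are assumed normalized to [-1, 1].
    Returns (x, y, hue) tuples centered on the origin.
    """
    points = []
    for i, s in enumerate(samples):
        s = max(-1.0, min(1.0, s))                              # clamp the normalized signal
        angle = 2 * math.pi * i / total_samples - math.pi / 2   # top of a y-down canvas
        radius = base_radius + amplitude * s                    # inhale pushes the contour out
        hue = 120.0 * (s + 1.0)                                 # color also follows the breath (0-240)
        points.append((radius * math.cos(angle), radius * math.sin(angle), hue))
    return points
```

Passing the planned number of samples as `total_samples` makes the ring fill in gradually, so a half-finished session draws a half circle.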

To monitor the user's breathing pattern, the system uses a computer vision algorithm based on the scientific paper “Prospective validation of smartphone-based heart rate and respiratory rate measurement algorithms”. The algorithm uses Pyodide to run Python code locally within the web browser and uses the OpenCV library to analyze the vertical movement of the torso. It estimates a torso region of interest from points of the ml5.js face mesh and calculates the dense optical flow of that area to track micro-movements of the torso, achieving contactless breath monitoring.

In the Breath Affirmation AI mode, the meditation instructions are generated with OpenAI's DaVinci-002 model using the following prompt: "Generate 12 sequential goal-oriented motivational affirmations. Each should be 5-word to achieve <users intention> and mentally prepare for it."

The Coherence Trainer mode provides the user with a breath pacer and a coherence percentage, which is calculated by identifying a single dominant, sine-wave-like peak in the 0.04–0.26 Hz range of the R-R interval power spectrum. The project aims to release the computer vision breath monitoring tool and the BLE heart-rate monitor analysis and Web Bluetooth connection as a mindfulness library for p5.js in the near future.
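One way to compute a coherence percentage of this kind is sketched below: resample the R-R series onto an even time grid, take its power spectrum, find the highest peak between 0.04 and 0.26 Hz, and report the share of total power that sits near that peak. The 4 Hz resampling rate, the ±0.03 Hz window, the peak-to-total ratio, and all function names are assumptions loosely modeled on common HRV coherence measures, not the project's exact formula. A plain DFT keeps the example dependency-free where `numpy.fft` would be the practical choice.

```python
import math


def resample_rr(rr_ms, fs=4.0):
    """Linearly interpolate R-R intervals (ms, at least 2 beats) onto an even grid at fs Hz."""
    times, acc = [], 0.0
    for rr in rr_ms:                      # beat k occurs at the cumulative sum of intervals
        acc += rr / 1000.0
        times.append(acc)
    out, j, t = [], 0, times[0]
    while t <= times[-1]:
        while j < len(times) - 2 and times[j + 1] < t:
            j += 1
        frac = (t - times[j]) / (times[j + 1] - times[j])
        out.append(rr_ms[j] + frac * (rr_ms[j + 1] - rr_ms[j]))
        t += 1.0 / fs
    return out


def power_spectrum(x, fs):
    """Plain DFT power spectrum of the mean-removed signal, bins 0..N/2."""
    n = len(x)
    mean = sum(x) / n
    x = [v - mean for v in x]
    freqs, power = [], []
    for k in range(n // 2 + 1):
        re = sum(v * math.cos(2 * math.pi * k * i / n) for i, v in enumerate(x))
        im = sum(v * math.sin(2 * math.pi * k * i / n) for i, v in enumerate(x))
        freqs.append(k * fs / n)
        power.append(re * re + im * im)
    return freqs, power


def coherence_percent(rr_ms, fs=4.0, band=(0.04, 0.26), half_width=0.03):
    """Share of spectral power concentrated around the dominant peak in `band`."""
    freqs, power = power_spectrum(resample_rr(rr_ms, fs), fs)
    in_band = [k for k, f in enumerate(freqs) if band[0] <= f <= band[1]]
    if not in_band:                       # recording too short to resolve the band
        return 0.0
    peak_f = freqs[max(in_band, key=lambda k: power[k])]
    peak_power = sum(p for f, p in zip(freqs, power) if abs(f - peak_f) <= half_width)
    total = sum(power[1:])                # exclude the DC bin
    return 100.0 * peak_power / total if total > 0 else 0.0
```

A slow, sine-like rhythm in the R-R intervals (as produced by paced breathing near 0.1 Hz) concentrates power in one narrow peak and scores high, while irregular intervals spread power across the spectrum and score low.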

Zen Pet - Heart Rate Variability Biofeedback Toy Developed for the NYU Mindful Education Lab

DEC, 2023

Zen Pet measures heart coherence and uses the power of play to help kids regulate their emotions and establish a meditation habit.

Light Guided Breathwork System - Breathwrk

AUG, 2022

A system that allows teachers to sync audio and lights to breathing exercises.

VR SonicXplorer - Real-Time Synthesis VR Instrument

MAY, 2021

A light and vibration interface that allows the user to pick different breathing patterns and smoothly interpolate between them in customizable durations.

Pneumatic Breath Guidance Chair

2022

Handheld Haptic Breath Guidance

DEC, 2021

Breathing Edges - Unobtrusive Breathing Intervention

2022

Breathing Interfaces

2022

The Garden - A Prayer Wheel App For Interactive Contemplation

MAY, 2021

The app lets the user choose from four different generative visual and sonic landscapes and turn a prayer wheel bearing the mantra of Avalokiteshvara.

PERSONAL WORK

OFFERING

NOV, 2020

Offering is a cross-modal sound installation realized as an interactive virtual musical ecosystem. It is sonified by physically informed models and experienced through a predefined arrangement of screen, controller, and speaker. The piece is conceived as an open-ended, artificial ritual space.

Three distinct Csound instruments were developed as part of this system: a singing bowl model, a metal bar model, and a formant synthesis vocal model. In its current form, which uses a visual system created with the Unity C# API, the user strikes the singing bowls, which in turn activates the other agents and causes them to produce sound. The system was developed to explore a surreal causality within a musical experience; the responsiveness of the virtual instruments' procedural sound design allows for a unique kind of presence.

The sacred iconography and context that traditionally surround the altar were the starting point for a surreal audiovisual dreamscape. In this configuration, sound is proposed as the cause that makes these agents manifest, and technology is understood as a portal through which the intelligence they represent is reached. The creature portrayed in the center is a direct reference to the work of Rubem Valentim, a Brazilian painter whose work blends modern composition with traditional Afro-Brazilian symbolism.

About the Implementation

Singing Bowls

To implement the singing bowls, a modal synthesis approach was used. A recording of a singing bowl was spectrally analyzed, and the loudest harmonics were selected in order to recreate a convincing sound with a computationally efficient number of modes. These modes were then used as the center frequencies of extremely resonant bandpass filters (Csound's mode opcode), and a short impulse of pink noise was used as the excitation source of this virtual resonating body.
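The modal approach can be illustrated with a bank of narrow two-pole resonators excited by a brief noise burst. This Python sketch is an analogy, not a port of Csound's `mode` opcode: the resonator formula, the `(freq_hz, q, gain)` mode format, the 5 ms burst, and the use of white rather than pink noise are simplifications, and any mode values passed in are placeholders rather than the analyzed singing bowl data.

```python
import math
import random


def resonator_bank(excitation, modes, sr=44100):
    """Sum a bank of two-pole resonators, one per (freq_hz, q, gain) mode.

    Each resonator behaves like a very resonant bandpass filter: theta sets the
    center frequency and the pole radius r sets the bandwidth (freq / q).
    """
    out = [0.0] * len(excitation)
    for freq, q, gain in modes:
        theta = 2 * math.pi * freq / sr
        r = math.exp(-math.pi * (freq / q) / sr)   # bandwidth in Hz = freq / q
        a1, a2 = 2 * r * math.cos(theta), -r * r
        y1 = y2 = 0.0
        for n, x in enumerate(excitation):
            y = (1 - r) * x + a1 * y1 + a2 * y2
            y2, y1 = y1, y
            out[n] += gain * y
    return out


def strike(modes, sr=44100, dur=1.0, burst_ms=5.0, seed=0):
    """Excite the bank with a short noise burst (white noise here, not pink)."""
    rng = random.Random(seed)
    burst = int(sr * burst_ms / 1000)
    excitation = [rng.uniform(-1.0, 1.0) if n < burst else 0.0
                  for n in range(int(sr * dur))]
    return resonator_bank(excitation, modes, sr)
```

For example, `strike([(220.0, 800.0, 1.0), (598.0, 700.0, 0.5)])` returns one second of a ringing, bowl-like tone. Higher `q` values give longer decays, and every mode frequency must stay below half the sample rate.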

In the Unity implementation, the velocity of the beater's collision with the singing bowl determines the cutoff frequency of the filter applied to the excitation source (pink noise): the greater the relative velocity, the higher the cutoff. With the modal synthesis approach, a sustained excitation source produces a bowed sound. To implement this, a second instance of the instrument was created in which the excitation source is not filtered; instead, the pink noise signal is multiplied by 1 while the user holds down the left mouse button and the virtual beater is colliding with the singing bowl mesh, and by 0 if either condition is false.
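The two control rules above, strike velocity opening a filter on the excitation and a 1/0 gate for the bowed instance, might look like this. It is a Python sketch with hypothetical names and ranges (`v_max`, 200 Hz to 8 kHz, an exponential mapping, a one-pole lowpass); in the piece these rules live in Unity C# and Csound.

```python
import math


def velocity_to_cutoff(rel_velocity, v_max=10.0, f_min=200.0, f_max=8000.0):
    """Harder hits give a brighter excitation; the exponential map spreads steps evenly by ear."""
    v = min(max(abs(rel_velocity) / v_max, 0.0), 1.0)
    return f_min * (f_max / f_min) ** v


def one_pole_lowpass(signal, cutoff_hz, sr=44100):
    """Simple one-pole lowpass used to darken the noise burst (unity gain at DC)."""
    a = math.exp(-2 * math.pi * cutoff_hz / sr)
    y, out = 0.0, []
    for x in signal:
        y = (1 - a) * x + a * y
        out.append(y)
    return out


def bow_gain(mouse_down, beater_touching):
    """Bowed instance: noise passes (x1) only while both conditions hold, otherwise x0."""
    return 1.0 if (mouse_down and beater_touching) else 0.0
```

The exponential velocity map is a design choice: a linear map would spend most of its range in the high frequencies, where changes are hard to hear.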

The different bowl sizes use the same algorithm with different base frequencies. This was done by multiplying the base frequencies of the bowls and their harmonics by a value set from within Unity: the larger the bowl, the lower the multiplier applied to the modes.
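Reusing one algorithm for every bowl size amounts to scaling a single list of mode frequencies. The reference modes, the 20 cm reference diameter, and the rule that the multiplier is inversely proportional to diameter (the scaling expected for geometrically similar bodies of the same material) are assumptions for illustration; in the piece the multiplier is simply a value set from Unity.

```python
# Hypothetical reference modes (Hz) for one bowl; the piece used its own analysis.
REFERENCE_MODES_HZ = [200.0, 540.0, 1000.0]
REFERENCE_DIAMETER_CM = 20.0


def size_multiplier(diameter_cm, reference_cm=REFERENCE_DIAMETER_CM):
    """Assumed rule: geometrically similar bowls scale pitch inversely with size."""
    return reference_cm / diameter_cm


def bowl_modes(multiplier, reference=REFERENCE_MODES_HZ):
    """Same algorithm for every bowl: scale the base frequency and all its harmonics."""
    return [f * multiplier for f in reference]
```

Because every mode is scaled by the same factor, the ratios between partials (and so the bowl's timbre) stay constant while the pitch moves.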

Metal Bars

The metal bar creatures were implemented with a similar modal synthesis approach, with one particularity: each creature is composed of three different resonating bodies running simultaneously. A script reads the angular velocity of each virtual metal block, and each block uses its own angular velocity value to control the amount of lowpass filtering applied to its excitation source. Another script controls the base frequency of each individual bar, and when the user strikes one of the singing bowls, a random number selects one of three chord combinations that the creatures can present.
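The metal bar logic can be sketched as a small class: each bar's angular velocity sets its excitation cutoff, and a bowl hit draws one of three chords at random. The class name, the linear velocity-to-cutoff mapping, the ranges, and the A, G, and F major triads in `CHORDS` are hypothetical stand-ins for the piece's actual values.

```python
import random

# Hypothetical chord sets: base frequencies (Hz) for the three bars of one creature.
CHORDS = [
    (220.0, 277.18, 329.63),   # A major
    (196.0, 246.94, 293.66),   # G major
    (174.61, 220.0, 261.63),   # F major
]


class MetalBarCreature:
    """Three resonating bars; each bar's own spin sets how bright its excitation is."""

    def __init__(self, chords=CHORDS, seed=None):
        self.chords = chords
        self.rng = random.Random(seed)
        self.base_freqs = chords[0]

    def on_bowl_hit(self):
        # A random draw picks one of the three chord combinations.
        self.base_freqs = self.rng.choice(self.chords)
        return self.base_freqs

    @staticmethod
    def excitation_cutoffs(angular_velocities, w_max=20.0, f_min=300.0, f_max=6000.0):
        # Faster spin means less lowpass (a higher cutoff) on that bar's excitation.
        cutoffs = []
        for w in angular_velocities:
            amount = min(abs(w) / w_max, 1.0)
            cutoffs.append(f_min + (f_max - f_min) * amount)
        return cutoffs
```

Keeping one cutoff per bar, rather than one per creature, lets a single creature sound both bright and dull at once when its blocks spin at different rates.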

A significant part of this sound source's sonic character comes from the way the blocks collide with one another. Using a procedural tail animation system by Filip Moeglich, the blocks follow an invisible bone structure animated by the tail animator, while each block is free to rotate as if it were floating. This results in an interesting sonic and visual pattern that can be altered through the parameters fed into the procedural animator. In this iteration, striking a singing bowl has a simple effect: the variable that controls the amount of movement is set to a high value and then decays back to stillness over time.
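The jolt-then-settle behavior can be modeled as an exponential decay driven by elapsed time. `MotionDriver`, its half-life parameter, and the exponential curve are assumptions; the source only states that the movement variable is set high on a hit and decays back to stillness.

```python
class MotionDriver:
    """Movement amount for the procedural tail animation (hypothetical helper).

    A bowl hit sets the amount to `peak`; it then decays exponentially toward
    `rest` (stillness), halving its distance to rest every `half_life_s` seconds.
    """

    def __init__(self, peak=1.0, rest=0.0, half_life_s=2.0):
        self.peak, self.rest, self.half_life_s = peak, rest, half_life_s
        self.amount = rest

    def on_bowl_hit(self):
        self.amount = self.peak

    def update(self, dt):
        # Frame-rate independent: the decay depends on elapsed time, not frame count.
        self.amount = self.rest + (self.amount - self.rest) * 0.5 ** (dt / self.half_life_s)
        return self.amount
```

Calling `update` once per frame with the frame's delta time gives the same curve at 30 or 60 frames per second.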

Formant Synthesis

A different technique was used to implement the vocal creature. Although similar to the modal synthesis approach, the formant synthesis used for the voice differs in a couple of ways. First, the center frequencies of the formants associated with specific vowels were taken from a pre-assembled chart; these values were then used as the center frequencies of bandpass filters, this time with much lower resonance values. Instead of an unpitched “excitation source”, the filters are fed a sawtooth wave. An interpolation system similar to the one used in the metal block creatures, which interpolates between arrays containing the modes, is employed here, but instead of different instruments, the arrays contain values for different vowels. The orientation of the creature's crown controls several synthesizer parameters: the angle offset on the X axis controls the pitch of the sawtooth wave sent into the filters, and the Y and Z axes control the interpolation between vowels.
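The crown-to-voice mapping can be sketched as follows: the X offset sets the sawtooth pitch, and the Y and Z offsets choose a point between four vowel arrays by bilinear interpolation. The ±45° range, the 80 to 320 Hz pitch span, the four-vowel layout, and the formant values (approximate textbook averages for adult male speakers) are all assumptions, not the chart or mapping used in the piece.

```python
# Approximate F1-F3 averages (Hz) for adult male speakers; illustrative only.
VOWEL_FORMANTS = {
    "a": [730.0, 1090.0, 2440.0],
    "e": [530.0, 1840.0, 2480.0],
    "i": [270.0, 2290.0, 3010.0],
    "u": [300.0, 870.0, 2240.0],
}


def _unit(angle_deg, max_tilt_deg):
    """Map an angle offset in [-max_tilt, +max_tilt] to [0, 1], clamped."""
    return min(max((angle_deg + max_tilt_deg) / (2 * max_tilt_deg), 0.0), 1.0)


def _lerp(a, b, t):
    """Element-wise linear interpolation between two formant arrays."""
    return [x + (y - x) * t for x, y in zip(a, b)]


def crown_to_synth(x_deg, y_deg, z_deg, max_tilt_deg=45.0, f_low=80.0, f_high=320.0):
    """Crown orientation -> (sawtooth pitch in Hz, formant center frequencies in Hz)."""
    pitch = f_low * (f_high / f_low) ** _unit(x_deg, max_tilt_deg)   # X tilt sets pitch
    ty, tz = _unit(y_deg, max_tilt_deg), _unit(z_deg, max_tilt_deg)
    edge_ae = _lerp(VOWEL_FORMANTS["a"], VOWEL_FORMANTS["e"], ty)     # Y moves along each edge
    edge_ui = _lerp(VOWEL_FORMANTS["u"], VOWEL_FORMANTS["i"], ty)
    return pitch, _lerp(edge_ae, edge_ui, tz)                        # Z blends the two edges
```

The returned formant list would set the center frequencies of the bandpass filters, and the pitch would set the sawtooth oscillator feeding them.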

Two developments are being pursued to turn this system into a fully realized sound installation. The first is to create the context in which the piece will be experienced. Right now, the beater is controlled with a mouse, but a straightforward next step would be to control it with a Leap Motion using simple OSC tools. The second is the speaker configuration. The singing bowl can produce very low-frequency drones, and a far more substantial experience can be achieved if these drones are not only heard but felt with the whole body.

CAUSAS

NOV, 2020

This compositional experiment relates three distinct aesthetic worlds: Xenakis's stochastic and game-theory propositions for sound organization, Ian Cheng's live simulations and Worlding parameters, and the naïf, mythological wood-carved sculptures of Veio.

Causas is a cross-modal soundscape ecosystem by composer Pedro Sodré. The system was built with the C# API of the Unity game engine together with a packaged version of CsoundUnity and a collection of concrete sound recordings. This musical system explores an acousmatic sound world in an expanded media context and is interested in creating surreal material correlations between virtual causes and sonic effects. Drawing on sacred iconography and a Brazilian naïf aesthetic connected to traditional woodcarving for its visuals, the system encourages the experiencer to infer and become familiar with the symbolic relations performed by the intelligences embodied in the spatialized agents.

The work is an effort to sonify the ideas and practices described by Ian Cheng in his Bag of Beliefs and Emissaries series, as well as in his book "The Emissaries Guide to Worlding". This contemporary music and sound composition system explores density across this potentially infinite musical form, as well as sonic landscapes, spatial occurrences, and implied physics in sound objects.

We see micro and macro sound gestures converge, manifesting organically as the simulation progresses. A first layer of development comes from the virtual listener's point of reference, which follows the camera as it orbits the environment, so sounds move closer or farther away and alternate between left and right. Another layer of development comes from the day and night states: as agents collide with the glowing cubes placed in the environment, the sky changes until night falls. At night, the agents go to their respective areas, and some time into this section a new Tetragram is summoned at the center of the system, playing a sound related to the archetype that particular configuration represents. A further source of development is that, as the system evolves, a species may become fewer in number, making its sound less prominent.

In the Buddhist tradition, phenomena are thought to be abstracted into five basic elements (in the simulation, space, the fifth element, is not represented by a specific agent). With this in mind, the sounds of each of the four main agents are designed to embody the archetypes associated with one of these elements. In general terms, the sonic environment features a procedurally generated singing bowl used to sonify the Tetragram movements; concrete sounds from different libraries, such as water, fire, wind, and metal; and a real-time algorithmic Csound additive instrument, spectrally modeled after a whistle, that increases and decreases in density and duration as the numbers of the different types of agents change. Causas might be a glimpse into a future in which composition looks and sounds more like gardening.

PROCESS

ACOUSMATIC COMPOSITIONS

PORTALS

APR, 2020

Portals is a 1:51 acousmatic piece inspired by the work of Richard Devine on his “Creatures” EP.

“Portals” explores sound textures, density across the form, and movement in the stereo field. The piece has one main section and a coda. In the main section, sine-like tones sporadically enter and leave the scene while a multitude of sonic objects, each with its own implied mass, size, and material, hover over and cross the sonic landscape. This section ends as the sounds suddenly dissolve; silence follows, and then the coda begins: a 30-second noisy crescendo with spatialized staccato attacks that ends abruptly at its climax. The piece was made entirely from sounds created with a Make Noise modular synthesizer, which were then shaped into the form of the piece with a plate reverb simulation and filtering.

TRANSMIGRATION

JUL, 2020

Transmigration is a 1:38 acousmatic piece. Its sounds were realized using generative Csound instruments and a 555-based circuit-bent synthesizer.

The piece was inspired by the work of Penderecki and Richard Devine. Throughout its unfolding, synthetic tones, presented either with glissandos or as short sounds with percussive envelopes, are superimposed on string-like grains and metallic scraping (done with waveguides).

Working with the empirical behavior of the instruments built for the piece, the composition shows a certain respect for the way the sounds captured from the built synthesizer and algorithms manifest, which comes through as a kind of rawness. The sonic and compositional gestures point toward non-programmatic unfolding and a general sense of navigating the unexpected.

AYANAMI

SEP, 2020

"Ayanami, Why Do You Pilot Your Eva?" is a 3:47 long acousmatic composition by composer Pedro Sodré.

The piece was realized by applying DSP to a short sound capture containing only the Japanese equivalent of the title phrase, a quote from the contemporary classic Japanese animated TV series Evangelion. The piece portrays an abstract soundscape, structured as 30–45-second miniatures that evolve abstractly through several different spectral processors. It uses standard Ableton effects such as compression, overdrive, EQ, and a plate reverb, as well as Csound Shimmer Reverb, Csound Mincer, Csound PVSBlur, a little Csound spectral morphing, and the Paul Stretch VST. The piece is conceptually rooted in the perspectives of Takashi Murakami's Superflat manifesto, which points to a global phenomenon of cultural flattening between high and low culture, the erotic and the grotesque, artistic practice and entertainment, and childlike and mature themes.

ONLINE INTERACTION SYSTEMS

CONNECT

JUL, 2020

Connect is a child-friendly interactive music system that allows users in different parts of the world, with no musical background necessary, to move characters across an abstract landscape of color blocks and produce musical interactions by controlling parametric music systems running inside Unity.

FORMAS LIVE PERFORMANCE

JUL, 2020

Formas was a live-streamed performance commissioned by Centro Cultural Paschoal Carlos Magno, in which four musicians each controlled a different 3D geometry as part of an aleatory acousmatic composition.

Both systems were designed using Normcore for multiplayer capability and CsoundUnity for real-time procedural sound design directly inside Unity, with a variety of sound synthesis and generative algorithms. For the controllable characters, the left-right position on the screen was mapped to panning, the vertical position to filter and note range, and the distance from the center to note density. In Connect, interaction sounds are triggered by collisions and have randomizable envelopes. All sounds in Connect are produced with simple FM and wavetables, while Formas uses a waveguide instrument. In Connect, users can interact with one another by colliding or by acquiring a different soundPet through the shapes positioned on the screen. Also in Connect, different spatializations are applied to the sounds as the background changes.
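The character mapping shared by Connect and Formas reduces to a few normalized screen measurements. This Python sketch uses hypothetical ranges and names (`character_controls`, 200 Hz to 8 kHz, a one-octave MIDI window between notes 36 and 96, 0.5 to 8 notes per second) and assumes that note density grows with distance from the center; the source does not state the direction or the ranges.

```python
import math


def character_controls(x, y, width, height, d_min=0.5, d_max=8.0):
    """Screen position of a character -> sound parameters (hypothetical ranges).

    x grows to the right and y grows downward (typical screen coordinates).
    """
    nx, ny = x / width, y / height
    pan = 2.0 * nx - 1.0                      # left edge -1, right edge +1
    height_amt = 1.0 - ny                     # 0 at the bottom, 1 at the top
    cutoff_hz = 200.0 * 40.0 ** height_amt    # higher on screen -> brighter (200 Hz..8 kHz)
    low_note = 36 + round(height_amt * 48)    # one-octave MIDI window that rises with height
    dist = math.hypot(nx - 0.5, ny - 0.5) / math.hypot(0.5, 0.5)   # 0 at center, 1 at corners
    density_hz = d_min + (d_max - d_min) * dist                     # assumed: farther -> busier
    return {"pan": pan, "cutoff_hz": cutoff_hz,
            "note_range": (low_note, low_note + 12), "density_hz": density_hz}
```

Normalizing by the screen size first keeps the mapping identical for every participant regardless of window resolution, which matters when players join from different devices.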

DESIGN & PROTOTYPING

ELEVATION

AUG, 2020

An audio plugin that uses resonators, a generative arpeggiator, and physically informed models to generate rich ambiences, suited to both contemplative sonic exploration and purposeful music composition.

GYRO

NOV, 2019

Gyro Sound is an immersive experience in which dancers shape music and soundscape through gestures made with the body. The interactive music system allows users to interact with the soundscape in a spatialized audio environment using data from their phones' gyroscope sensors.

BIRDS SYSTEM

AUG, 2020

The work is realized with a custom-built generative Csound additive/modal synthesis orchestra whose partials are modeled after a recording of a human whistle. The algorithmic element of the system centers on two components: different envelopes that are interpolated at will to produce the morphing effect heard as sections change, and a random impulse generator with density control that is used as a rhythmic generator. Currents points toward the different scales at which flows of air produce sound. The sonic constructions transform continuously into one another, relating to life, natural phenomena, and subtle presences.
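The two algorithmic elements described above, a random impulse generator with density control and interpolation between envelopes, can be sketched in Python as follows. Modeling the impulses as a Poisson process (exponentially distributed gaps) and representing envelopes as equal-length breakpoint lists are assumptions, as are the function names; the actual system is a Csound orchestra.

```python
import random


def random_impulses(density_hz, duration_s, rng):
    """Impulse onset times with exponentially distributed gaps (a Poisson process).

    density_hz is the average number of impulses per second.
    """
    times, t = [], 0.0
    while True:
        t += rng.expovariate(density_hz)
        if t >= duration_s:
            return times
        times.append(t)


def morph_envelope(env_a, env_b, amount):
    """Interpolate two breakpoint envelopes [(time_s, level), ...] of equal length."""
    return [(ta + (tb - ta) * amount, la + (lb - la) * amount)
            for (ta, la), (tb, lb) in zip(env_a, env_b)]
```

Raising `density_hz` over time thickens the rhythmic texture, and sweeping `amount` from 0 to 1 across a section produces a gradual envelope morph.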

AURA

AUG, 2020

As part of an exploration of gesture, physically informed models, embedded processors, and traditional healing perspectives, Aura takes the form of a wand-like, self-contained system in which the user's physical movement excites a virtual resonating body of a singing bowl.

STUDIES ON SOUND + GESTURE

MAY, 2020

Studies on Sound + Gesture is a series of four gesture-based interactive music systems, each exploring a different aspect of the integration of gesture, sound, and image: the relation to sound creatures, interaction with physically simulated objects, and control of generative clouds/particles.

CUTTER

AUG, 2020

Cutter (2020) takes the form of a raw mechanical system that explores the performance, materials, colors, sounds, and textures of the ASMR video format.

How does media development, necessarily tied to our multisensory perceptual apparatus, respond to the lack of tactile, olfactory, gustatory, and auditory stimuli in our current digital environment?

COLLABORATIONS

LOST CREATURE (VR) - WORK IN PROGRESS

DEC, 2020

Lost Creature (VR) is a cyclical narrative in the form of an interactive VR experience. The experience is built around the user's interaction with Tim, an NPC who uses sound and body language to convey his emotional states. Tim has a clear sense of where he wants the user to go next, and it is the user's role to pilot the boat, taking both of them to the locations Tim intends while making sure that Tim is feeling okay by interacting with him when needed. When the user meets a turtle, they can interact with it musically through an interactive music system tied to the collision of particles.

Featuring: Terry Wang, Daniela Gómez Munguía.

Role: Lead Designer, Programmer, 3D Modeling, Sound Design and Audio Implementation.

DREAM MACHINE

JUN, 2020

“The dream machine is a journey and a music driven adventure game where players interact with objects, dreamers and dream makers to solve puzzles and discover the story. The game features recorded dialogue combined with real-time performance elements to enable you to complete your journey and experience in the dream machine.”

Featuring: Akito van Troyer, Nona Hendryx, Chagall, Lori Landay, Chris Lane, Alan Kwan, Luís Zanforlin, Kamden Storm, Ri Lindegren.

Role: Character, Sound and Visual design.

ROSABEGE - “IMAGEM”

2018 - 2020

Featuring: João Rocha, Vitor Milagres, Thiago Fernandes and Caio Jiacomini.

Quase Tudo No Seu Lugar, 2020 - Role: Visual Design, 3D Modeling, Musical Direction, Development.